These 10 Things are Killing Your Survey

Sept. 8, 2018, 9:31 a.m.

If you are using surveys for research, these ten problems may be costing you dearly in time and money.


Here they are, ten rules for designing a survey:

Rule 0: Don't design a survey

Using a previously published and validated survey can save you hours and hours of time.

Using a previously published tool also adds validity to a research project. This is particularly true when surveys are used as evaluation tools to judge the effectiveness of an intervention.

We encourage careful research and the use of a librarian to hunt down a previously published survey tool and are continually surprised to find how often a previously validated tool is available.

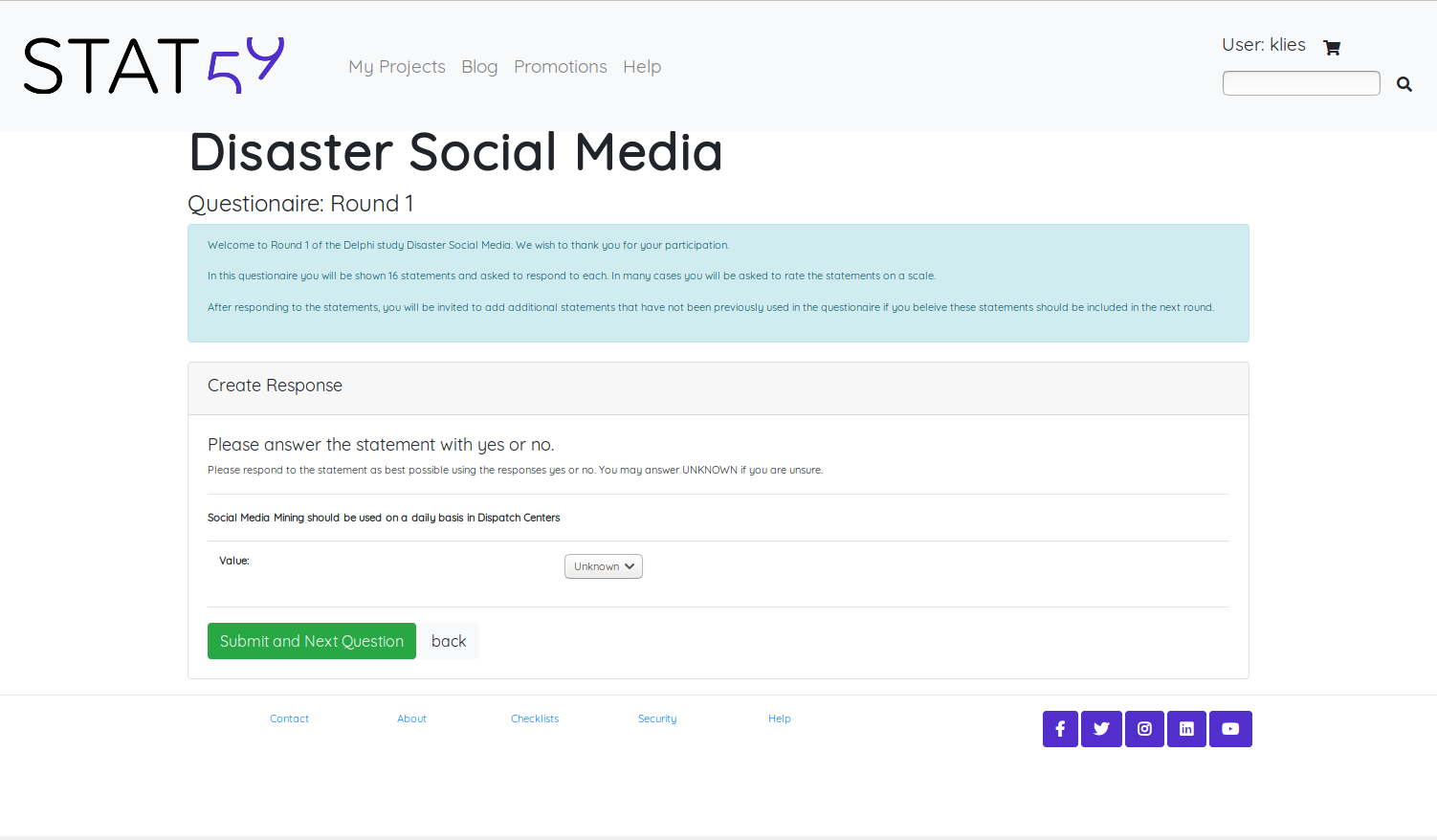

Rule 1: Use an adequate introduction

A poorly written introduction can drive participants away from the survey before they even answer the first question. I suspect all of us at one time have opened and started a survey only to ask ourselves "why am I wasting my time on this?" Most participants will make their decision to complete or ignore your survey in the first few seconds. Surveys need to make a great first impression.

The introduction should tell the participants - in as few words as possible - the following: 1) the purpose of the study, 2) if the study is anonymous, 3) if participation is voluntary, and 4) approximately how long it will take.

Getting the participants to start answering questions quickly is a great way to encourage survey completion. Once participants have invested a few minutes in answering questions, they are far more likely to continue to completion. The first questions after the introduction should generally be simple and not address complex or sensitive topics. We generally prefer to have the first few questions answered by simple yes/no or short answers. We usually introduce more complicated questions, such as those involving scales, only later in the questionnaire.

Rule 2: Divide the survey into manageable sections

A long, continuous series of questions can easily overwhelm your participants; many will simply give up. Breaking the survey into smaller tasks, each taking no more than a few minutes, will increase the chance participants will keep going.

Early sections in the survey should be clearly related to the survey's stated purpose, and if possible should proceed in an order meaningful to the participants. This could include grouping questions by topic or by scale type. Sections with difficult or sensitive questions should never appear at the beginning and should if possible be in the middle of the survey.

Questions within each section should start with the familiar and lead to the unfamiliar. Objective questions should precede subjective questions.

We tend to group questions by type of scale. Each section includes the instructions for use of the scale and is followed by 5 to 10 questions. We like to ensure that the instructions for scale use are always visible while the scale is being used.

Rule 3: Limit branching

The branching that you design into your survey may seem simple to you, but if participants cannot follow it, spoiled surveys and contradictory data can result. Branching can also be very time-consuming for the participants, as they may need to read the branching instructions several times to understand how to proceed.

We tend to avoid branching of any type in paper-based surveys and use branching only in electronic surveys, where it can be made invisible to the user.

Rule 4: Avoid bias in questions

As a researcher you are probably passionate about your work; this same passion can easily creep into your survey questions as bias. Bias enters into our surveys in a number of ways such as leading questions, example containment, overly specific questions, overly general questions, or inappropriate reliance on participant recall. Often being an expert in the field can make it harder to write unbiased questions.

Careful consideration of the language skills and level of knowledge of the survey participants is mandatory. Abbreviations or technical terms misinterpreted by participants can give uninterpretable results.

We tend to favor survey questions that are short, use simple language, and are clearly focused on a single piece of information. Overall we favor clarity over cleverness.

Rule 5: Leave demographics and sensitive questions for the end

Perhaps nothing offends your participants more quickly than starting a survey with immediate demographic questions such as age, gender, level of education, or salary. If you must ask these questions, they should be relegated to the end, when participants have already answered the important questions of the survey body. Participants should also be able to understand from your survey design, WHY these demographic questions are important. If the demographics are optional - as they usually should be - make sure to indicate that in your survey.

We tend to favor obtaining only those demographics strictly necessary for the study objectives. We usually include the demographics in their own well-demarcated section at the end of the survey, and clearly label the demographics as optional.

Rule 6: Precode the Survey

Failure to precode paper based surveys at the development stage can make data collection tedious, expensive, and error prone. Although electronic surveys have largely superseded paper based surveys, in many cases paper based surveys are still more convenient for the participants. However, paper based surveys must be transcribed to a data table at some point, and this can be very, very error prone. Paper based surveys should be carefully numbered to ensure that the data can be transcribed easily into the data table.

When using printed surveys, we tend to print the number of the question in light grey at the beginning of the question. Subsequent responses are then coded, also in light grey, with the number of the question and the letter of the response, for example 6a. This makes the coding unobtrusive to the participant and simple for the data collector.
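As a rough illustration of why this kind of precoding helps at the transcription stage, here is a minimal Python sketch that turns the codes a data collector reads off one paper survey (such as 6a) into a row of the data table. The function names `parse_code` and `tabulate` and the one-answer-per-question rule are assumptions made for this example, not part of any particular survey tool.

```python
import re

# A precode is a question number followed by one response letter, e.g. "6a".
CODE_PATTERN = re.compile(r"^(\d+)([a-z])$")


def parse_code(code):
    """Split a precode such as '6a' into (question number, response letter)."""
    match = CODE_PATTERN.match(code.strip().lower())
    if match is None:
        raise ValueError(f"not a valid precode: {code!r}")
    return int(match.group(1)), match.group(2)


def tabulate(marked_codes):
    """Build one data-table row {question: letter} from one paper survey.

    Assumes each question allows a single response, so a question that
    appears twice is reported as a transcription error.
    """
    row = {}
    for code in marked_codes:
        question, letter = parse_code(code)
        if question in row:
            raise ValueError(f"question {question} was entered twice")
        row[question] = letter
    return row


# Hypothetical codes read off one completed paper survey.
row = tabulate(["6a", "7c", "8B"])
print(row)
```

Because every response carries its question number, a mistyped or duplicated entry is caught at the moment it is entered rather than during analysis.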

Rule 7: When using scales, consider how you will analyze before constructing

Choosing the wrong scale is a sure-fire path to an overly complicated analysis. Scales are a very effective way to get quantitative information from the participant, and often contain much more information than yes/no or open ended questions. Scales are also very important for Delphi studies beyond the first round.

Numerous types of scales exist. Categorical scales include discrete choice, checklists, ranking, fixed sum, and paired comparison. Ordinal scales include Likert, verbal frequency, semantic differential, rating, and comparative. All scales have their pros and cons. Choosing the correct scale involves balancing two, often opposing, forces. First, all scales should be developed with the audience in mind. Scales such as ranking and fixed-sum can be difficult for some users. Secondly, researchers should keep in mind how the data will be represented before creating the scales. For instance, Likert scales are commonly used, but presenting the results of a Likert scale can take a lot of space and time. If the questionnaire contains a large number of rating questions, it can be much more effective to use another type of scale.

We tend to prefer the use of a semantic differential scale, which can be easily summarized as mean and standard deviation, over Likert scales, which are more verbose to summarize.
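To make the difference in reporting effort concrete, the following Python sketch summarizes one item of each kind. The ratings, the 7-point range, and the category labels are invented for illustration; the only point is that the numeric item collapses to two numbers while the labelled item needs one count per category.

```python
from collections import Counter
from statistics import mean, stdev


def summarize_numeric(ratings):
    """Summarize numeric ratings as (mean, sample standard deviation)."""
    return round(mean(ratings), 2), round(stdev(ratings), 2)


def summarize_categories(responses, categories):
    """Summarize labelled responses as a count for every category."""
    counts = Counter(responses)
    return {category: counts[category] for category in categories}


# Hypothetical ratings on a 7-point semantic differential item.
ratings = [5, 6, 4, 7, 5, 6]
print(summarize_numeric(ratings))

# Hypothetical responses to one Likert item.
labels = ["strongly disagree", "disagree", "neutral", "agree", "strongly agree"]
answers = ["agree", "agree", "neutral", "strongly agree", "disagree", "agree"]
print(summarize_categories(answers, labels))
```

With many rating questions, the first form fits in a single table row per question, whereas the second needs a column for every response category.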

Rule 8: Use open ended questions sparingly

Using too many open ended questions is a common mistake: these questions are seductively easy to write but painfully hard to analyze. Good reasons to use open ended questions include a long list of potential responses or the risk of biasing the responses through "example containment" where the responses listed are more limited than the imagination of the participants. Open ended questions are commonly used in focus groups or in the early rounds of Delphi Analysis where the overall goal is quantity of ideas.

A more common, and bad, reason for open ended questions is simply lack of knowledge of how to create a more structured question. Firstly, this may be due to lack of content knowledge in the field. In this case, seeking help from experts in the field may help clarify the intent of the question. Secondly, this may be due to the researcher's inability to structure an unbiased and effective question. In this case seeking help from an expert in questionnaire methodology is advised.

We tend to include open ended questions only when no other alternative exists, such as focus groups or early rounds of Delphi studies when the emphasis is on quantity rather than quality of ideas. When using open ended questions we usually insist that the plan for exactly how they will be analyzed is clearly described before any data collection proceeds.

Rule 9: Use a code book

Converting open ended questions to useful data points can be complicated and error-prone. Although there are a multitude of software solutions using text mining or clustering to analyze open ended questions, in many cases a simple code book will suffice. Using a code book is a simple way to cluster information for analysis.

A code book is a type of dictionary that maps the participants' free text to the researchers' key words. Let's look at a simple example of a survey that is assessing types of injury in rock-climbing. Assume the survey question is an open ended question asking "What part of your body have you injured?" The responses may include such words as "toenail", "right big toe", and "fifth metatarsal." Recording these responses directly into the data table will make analysis difficult. Instead, the researcher may code all three of these responses as "foot". The word "foot" is written on the top of a page in the code book as a key word. The words "toenail", "right big toe", and "fifth metatarsal" are written on the page under the key word. The next time the words "toenail" or "right big toe" occur, they can be seen in the code book as being classified as "foot." Usually, all researchers tabulating the data use the same code book, ensuring that open ended responses are always coded the same.

We tend to favor using a hand-written code book, as data coding usually means already having a few windows open on your computer and adding an electronic code book can be awkward. We also request all data collectors use the same book.
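For teams that do keep the code book electronically, the same idea can be sketched in a few lines of Python. This is only an illustration built on the rock-climbing example above; the names `CODE_BOOK`, `build_lookup`, and `code_response` are made up for the sketch, and a response that is not yet in the book is returned as `None` so that a researcher can decide where to file it.

```python
# Each key word is a "page" of the code book; the list holds the
# free-text responses written under that key word.
CODE_BOOK = {
    "foot": ["toenail", "right big toe", "fifth metatarsal"],
}


def normalize(text):
    """Lower-case the text and collapse extra whitespace before lookup."""
    return " ".join(text.lower().split())


def build_lookup(code_book):
    """Invert the code book into a {response: key word} dictionary."""
    lookup = {}
    for key_word, responses in code_book.items():
        for response in responses:
            lookup[normalize(response)] = key_word
    return lookup


def code_response(text, lookup):
    """Return the key word for a response, or None if it is not yet coded."""
    return lookup.get(normalize(text))


lookup = build_lookup(CODE_BOOK)
print(code_response("Right  big toe", lookup))  # matches the "foot" page
print(code_response("left elbow", lookup))      # not in the book yet
```

Sharing one such file among all data collectors plays the same role as sharing one hand-written book: the same response always receives the same key word.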

Rule 10: Trial the survey first

Once your survey is finalized and sent out to participants, it is too late to find errors in the survey design. Trialling a survey before going "live" is mandatory. All areas of the survey process should be trialled: this includes distribution, data collection, data tabulation, and analysis. Correcting small errors in the survey at the outset, during the design phase, is often simple. Correcting them later can be costly or even impossible.

Rather than asking expert colleagues to look at the survey and "see if it looks okay" we prefer to have these colleagues actually complete the survey as if they were participants. From there we will usually trial the data collection and tabulation of these results to ensure that the final data table is suitable for subsequent statistical analysis.

Golden Rule: Keep it simple

How can all these rules be easily summarized? Just keep it simple. Long, complicated surveys are painful for both the participant and the researcher. Think carefully about the information you are obtaining. Cut ruthlessly from your survey any questions that do not directly contribute to the overall goals of the project. Look honestly at the quantity of information you are obtaining: is it realistic to present all this information in a coherent fashion? Usually a few very simple but carefully constructed survey questions will provide far more information than a huge volume of unstructured data.
